Using MCP Services

After configuring MCP services, you can use them in the agent's Output Node and Blank Node.

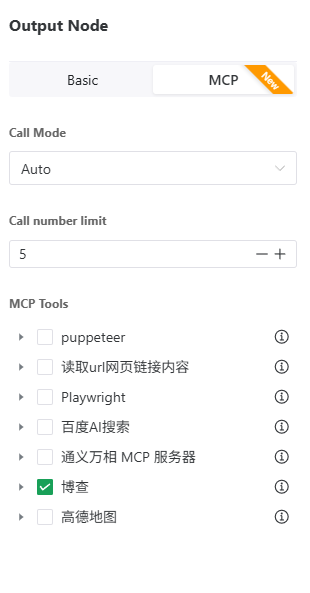

Click the node you want to configure. Its properties panel contains an MCP tab where you choose which MCP services the node can use.

💡 Note: Because MCP services are called automatically by the large language model (LLM), how well they work depends heavily on the LLM and on your prompts. Not every LLM is good at calling MCP services, so a highly capable model such as DeepSeek-V3 or GPT-4.1 is recommended. If the LLM does not call an MCP service as expected at first, don't give up: adjust your prompts until the LLM calls the service reliably.

Call Modes

MCP services support two call modes:

Auto: The LLM decides on its own whether an MCP service needs to be called (equivalent to the "auto" setting in OpenAI function calling). It may make no calls at all, or several.

Required: The LLM is asked to call the most appropriate MCP service at least once (equivalent to the "required" setting in OpenAI function calling). The actual behavior still depends on how the chosen LLM handles this setting, so a call is not guaranteed.
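The mapping between the two call modes and OpenAI's `tool_choice` parameter can be sketched as a request payload. This is a minimal sketch, assuming the platform sends an OpenAI-compatible Chat Completions request; the platform's real internals are not documented here, and `build_request`, `WEATHER_TOOL`, and the `get_weather` tool are hypothetical names used only for illustration. Only the `tools` / `tool_choice` fields and their `"auto"` / `"required"` values come from the OpenAI API.

```python
# Hypothetical MCP tool, exposed to the LLM as an OpenAI-style function definition.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",  # hypothetical tool name
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

# Maps the node's call mode setting to OpenAI's tool_choice values.
CALL_MODE_TO_TOOL_CHOICE = {"Auto": "auto", "Required": "required"}


def build_request(messages: list, tools: list, call_mode: str, model: str = "gpt-4.1") -> dict:
    """Build a Chat Completions payload (hypothetical helper, no network call)."""
    if call_mode not in CALL_MODE_TO_TOOL_CHOICE:
        raise ValueError(f"unknown call mode: {call_mode!r}")
    return {
        "model": model,
        "messages": messages,
        "tools": tools,
        # "auto": the model may call zero or more tools.
        # "required": the model is asked to call at least one tool.
        "tool_choice": CALL_MODE_TO_TOOL_CHOICE[call_mode],
    }


if __name__ == "__main__":
    req = build_request([{"role": "user", "content": "Weather in Paris?"}], [WEATHER_TOOL], "Required")
    print(req["tool_choice"])
```

Even with `"required"`, the final behavior is up to the model, which is why the Required mode cannot guarantee a call.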

Maximum Number of MCP Calls Per Single Answer

Sets the maximum number of times the LLM can call MCP services while generating a single answer.
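One common way such a limit works is a tool-calling loop that stops requesting tool calls once a counter reaches the cap. The sketch below assumes that design; it is not the platform's documented implementation. `answer_with_limit`, `make_stub`, and the `search` tool are hypothetical, and the stubbed "LLM" simply returns a tool name or `None` so the example runs without any model.

```python
from typing import Callable, Optional

# Hypothetical stand-in for one LLM turn: returns the name of the tool it
# wants to call next, or None when it is ready to give the final answer.
LLMStep = Callable[[list], Optional[str]]


def answer_with_limit(llm_step: LLMStep, tools: dict, question: str, max_calls: int) -> tuple:
    """Run tool calls until the model stops asking or max_calls is reached."""
    history = [("user", question)]
    calls = 0
    while calls < max_calls:
        tool_name = llm_step(history)
        if tool_name is None:
            break  # the model decided no further tool call is needed
        result = tools[tool_name]()
        history.append(("tool", tool_name, result))
        calls += 1
    # Once the loop ends, the model would be asked to answer without more tool calls.
    return history, calls


def make_stub(n: int) -> LLMStep:
    """Hypothetical LLM stub that asks for the 'search' tool n times, then answers."""
    def step(history: list) -> Optional[str]:
        made = sum(1 for entry in history if entry[0] == "tool")
        return "search" if made < n else None
    return step


if __name__ == "__main__":
    tools = {"search": lambda: "search result"}
    _, capped = answer_with_limit(make_stub(10), tools, "question", max_calls=3)
    _, natural = answer_with_limit(make_stub(2), tools, "question", max_calls=5)
    print(capped, natural)
```

A model that keeps asking for tools is cut off at the limit, while a model that finishes early is unaffected by it.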

MCP Tools

Lets you select which of the available MCP services, and which specific tools within each service, the node can use.

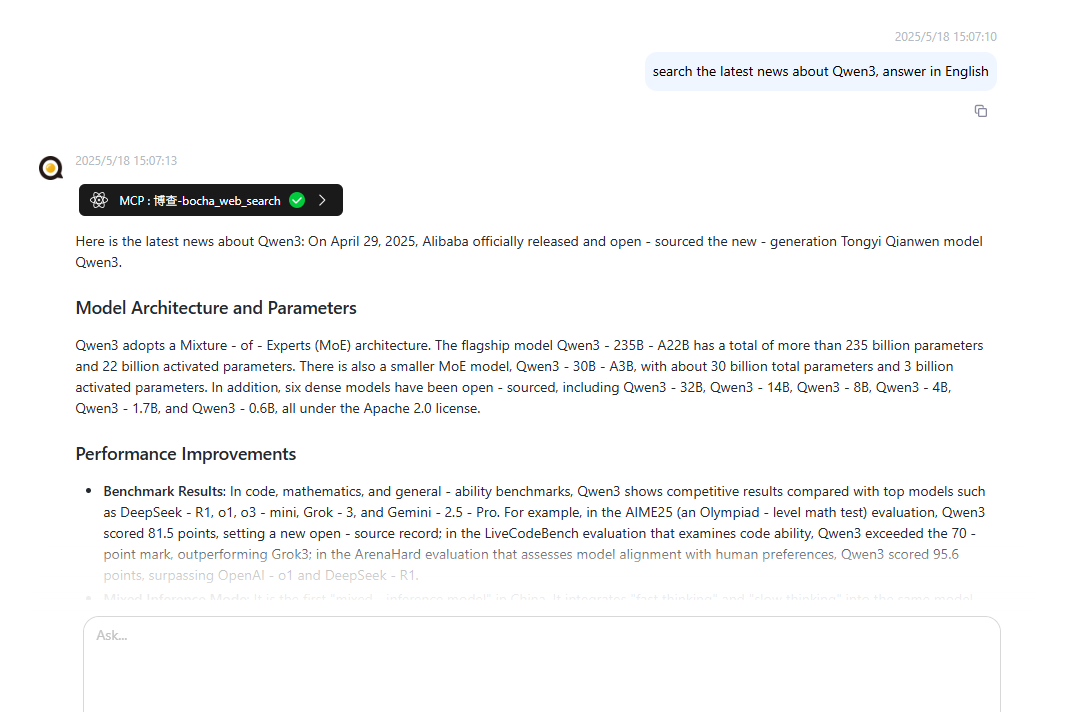

Once MCP services and tools are configured on a node, the LLM can call them while you interact with the agent, helping it answer questions more effectively.