MCP

Overview

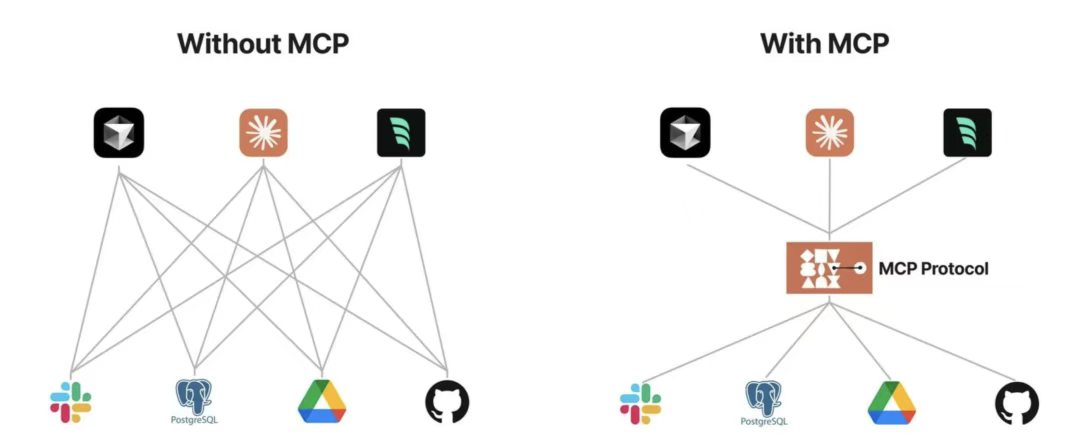

MCP (Model Context Protocol) is an open protocol that standardizes the way applications provide context to LLMs. You can think of MCP as the USB-C port for AI applications. Just as USB-C provides a standardized way to connect devices to various peripherals and accessories, MCP provides a standardized method for connecting AI models to different data sources and tools.

At present, many MCP applications are still implemented on top of function calling. Given cost and applicability, this is usually the most practical choice, and it shows that MCP and function calling are not competitors: function calling is how a model expresses which tool it wants to use, while MCP standardizes how tools and context are exposed to the application. MCP aims to provide a protocol paradigm for AI applications, and its innovation lies in building a layered, decoupled architecture for AI tool interactions.

The basic call flow is as follows:

- The AI application packages the user's question and the list of available tools into a context and sends it to the LLM (a model that supports function calling);

- The LLM analyzes the request and outputs the name of the function to call together with the required arguments;

- The application turns this output into an actual function call, executes it, and appends the returned result to the context;

- Based on the updated context, the LLM decides whether further tool calls are needed. The loop repeats until the LLM determines that it has all the necessary information, and it then produces the answer the user expects.
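The loop above can be sketched in Python. This is a minimal, hypothetical illustration, not MCP SDK code: `fake_llm` stands in for a real function-calling model, and the tool `get_weather`, the message dictionaries, and `run_agent` are all invented names. The `max_steps` cap is an added safeguard against a model that never stops requesting tools.

```python
import json
from typing import Callable

# Hypothetical tool registry: tool name -> Python function.
TOOLS: dict[str, Callable[..., str]] = {
    "get_weather": lambda city: f"Sunny, 22 C in {city}",
}


def fake_llm(context: list[dict]) -> dict:
    """Hypothetical stand-in for a function-calling model.

    Requests one tool call, then answers once a tool result is in the context.
    """
    tool_results = [m for m in context if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "get_weather", "arguments": {"city": "Paris"}}
    return {"type": "answer", "text": f"Here is the forecast: {tool_results[-1]['content']}"}


def run_agent(question: str, llm=fake_llm, tools=TOOLS, max_steps: int = 5) -> str:
    # Step 1: package the question and the available tools into the context.
    context = [
        {"role": "system", "content": "Available tools: " + json.dumps(sorted(tools))},
        {"role": "user", "content": question},
    ]
    for _ in range(max_steps):
        # Step 2: the model outputs either a function name + arguments or a final answer.
        output = llm(context)
        if output["type"] == "answer":
            # Step 4: the model decided it has all the information it needs.
            return output["text"]
        # Step 3: turn the model output into a real call and append the result.
        result = tools[output["name"]](**output["arguments"])
        context.append({"role": "tool", "name": output["name"], "content": result})
    raise RuntimeError("no final answer within max_steps")


print(run_agent("What's the weather in Paris?"))  # Here is the forecast: Sunny, 22 C in Paris
```

Swapping `fake_llm` for a real model client only changes how step 2 is produced; the cycle of calling a tool, appending its result, and asking the model again stays the same.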

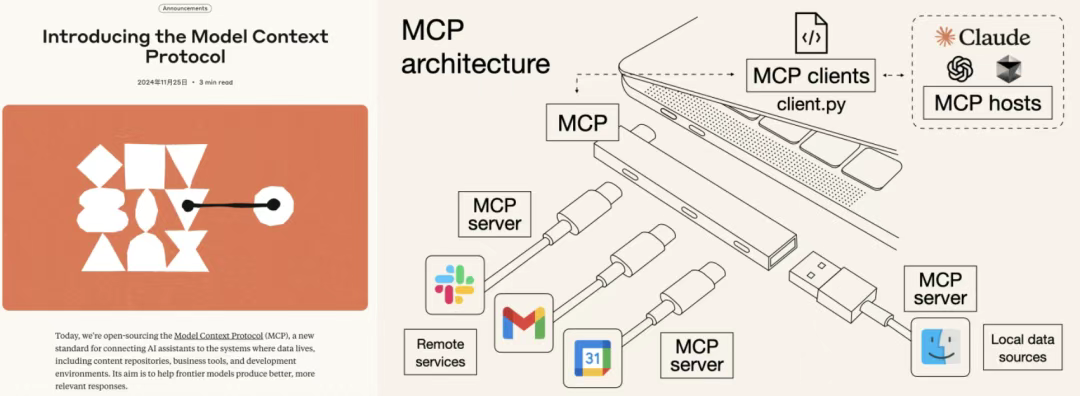

The Basic Architecture of MCP

The official MCP documentation divides the architecture into the following components:

- MCP Hosts: LLM applications that initiate requests (for example, Claude Desktop, JianDan Intelligent Agent).

- MCP Clients: Protocol clients inside the host application, each maintaining a 1:1 connection with an MCP server.

- MCP Servers: Provide context, tools, and prompts to MCP clients.

- Local Data Sources: Resources or services on the local computer that the MCP server can access securely.

- Remote Services: Remote resources that the MCP server can connect to (for example, via APIs).
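The relationship between host, clients, and servers can be sketched as follows. This is a simplified, hypothetical in-process model: the class names `MCPServer`, `MCPClient`, and `Host` are invented, the servers are plain Python objects rather than separate processes, and the `initialize` handshake, tool descriptions, and input schemas are omitted. The messages follow the JSON-RPC 2.0 shape MCP uses, with the `tools/list` and `tools/call` methods; the tool functions stand in for the local data sources or remote services a real server would reach.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class MCPServer:
    """Hypothetical in-process server exposing tools."""
    name: str
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)

    def handle(self, raw: str) -> str:
        # Decode a JSON-RPC 2.0 request and dispatch on its method name.
        req = json.loads(raw)
        if req["method"] == "tools/list":
            result: Any = {"tools": [{"name": n} for n in sorted(self.tools)]}
        elif req["method"] == "tools/call":
            params = req["params"]
            text = self.tools[params["name"]](**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}]}
        else:
            # -32601 is the standard JSON-RPC "Method not found" error code.
            return json.dumps({"jsonrpc": "2.0", "id": req["id"],
                               "error": {"code": -32601, "message": "Method not found"}})
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


@dataclass
class MCPClient:
    """One client per server: the 1:1 connection described above."""
    server: MCPServer
    next_id: int = 1

    def request(self, method: str, params: Optional[dict] = None) -> Any:
        msg: dict = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            msg["params"] = params
        self.next_id += 1
        reply = json.loads(self.server.handle(json.dumps(msg)))
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]


@dataclass
class Host:
    """The LLM application: owns one client per connected server."""
    clients: dict[str, MCPClient] = field(default_factory=dict)

    def connect(self, server: MCPServer) -> None:
        self.clients[server.name] = MCPClient(server)

    def list_all_tools(self) -> dict[str, list[str]]:
        return {name: [t["name"] for t in c.request("tools/list")["tools"]]
                for name, c in self.clients.items()}


# Usage: one server backed by "local files", one by a "remote weather API" (both faked).
host = Host()
host.connect(MCPServer("files", {"read_note": lambda path: f"(contents of {path})"}))
host.connect(MCPServer("weather", {"get_weather": lambda city: f"Sunny in {city}"}))
print(host.list_all_tools())  # {'files': ['read_note'], 'weather': ['get_weather']}
reply = host.clients["weather"].request(
    "tools/call", {"name": "get_weather", "arguments": {"city": "Oslo"}})
print(reply["content"][0]["text"])  # Sunny in Oslo
```

Keeping one client per server means the host can add or drop a server without touching the others, which is the decoupling the architecture is built around.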