OpenAI Compatible API Call

The Gendial platform exposes intelligent agents through an OpenAI-compatible API, so other software and OpenAI client libraries can call them directly. You can integrate your Gendial intelligent agents into open-source clients such as Chatbox or Cherry Studio, or bring their capabilities into existing business systems via the API.

The key parameters for calling the OpenAI-compatible API are described below.

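Base URL

The base URL is https://gendial.cn/api/v1. OpenAI-compatible clients append /chat/completions to it, which gives the endpoint used in the curl examples on this page.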

Model Parameter

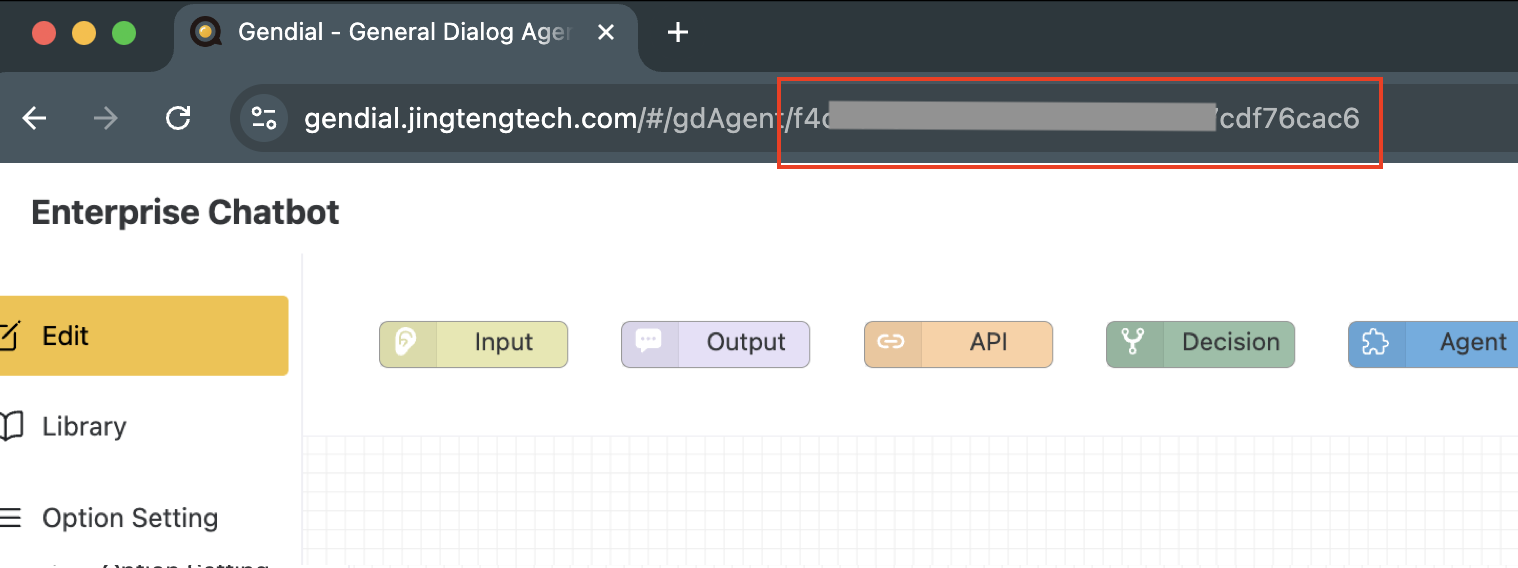

The model parameter is the GUID of the intelligent agent, which can be found in the intelligent agent browser:

API Key Parameter

The apiKey parameter is the intelligent agent's API key. It is sent in the Authorization header as a bearer token (Authorization: Bearer API_KEY).
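As a minimal sketch of how these three values fit together, the bash functions below derive the chat completions endpoint from the base URL and send a request with the API key as a bearer token. The environment variable names GENDIAL_BASE_URL, GENDIAL_API_KEY and GENDIAL_AGENT_GUID and the function names are hypothetical, chosen only for illustration; the default base URL is the one used by the curl examples on this page.

```bash
#!/usr/bin/env bash

# Hypothetical configuration; override these through the environment.
GENDIAL_BASE_URL="${GENDIAL_BASE_URL:-https://gendial.cn/api/v1}"
GENDIAL_API_KEY="${GENDIAL_API_KEY:-YOUR_API_KEY}"          # the agent's API key
GENDIAL_AGENT_GUID="${GENDIAL_AGENT_GUID:-YOUR_AGENT_GUID}" # the agent's GUID, sent as "model"

# OpenAI-compatible clients append /chat/completions to the base URL.
# A single trailing slash on the base URL is tolerated.
chat_endpoint() {
  printf '%s/chat/completions' "${GENDIAL_BASE_URL%/}"
}

# POST a JSON request body (first argument) to the agent and print the raw response.
gendial_post() {
  curl -sS "$(chat_endpoint)" \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer ${GENDIAL_API_KEY}" \
    -d "$1"
}
```

For example, gendial_post "$(cat request.json)" would send a body stored in a hypothetical request.json file whose model field holds the agent's GUID.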

Other OpenAI Parameters

The intelligent agent API also supports other parameters defined by the OpenAI Chat Completions API. Common ones include:

max_tokens: Maximum length of the model's response, in tokens. The response is also limited by the model's context window.

stream: Whether to enable streaming output. Streaming only works if it is also enabled on the intelligent agent's output node; if the agent does not support streaming, setting stream to true in the request will not produce streamed output.

temperature: Sampling temperature, which controls how flat or peaked the probability distribution over candidate tokens is during text generation. The range is [0, 1]. At 0, the model only considers the token with the highest log probability. Higher values (e.g., 0.8) make the output more random, while lower values (e.g., 0.2) make it more focused and deterministic. It is generally recommended to adjust either temperature or top_p, but not both.

top_p: Nucleus sampling threshold. The model samples only from the smallest set of tokens whose cumulative probability reaches top_p. The range is [0, 1]. At 0, the model only considers the token with the highest log probability. A value of 0.1 means only the tokens making up the top 10% of the probability mass are considered. Larger values make the output more varied, and smaller values make it more deterministic. It is generally recommended to adjust either temperature or top_p, but not both.
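The bash sketch below assembles a request body with these optional parameters and checks the ranges stated above before anything is sent. The helper names are hypothetical. Printing a warning, rather than failing, when both temperature and top_p are set is a choice of this sketch, since the text above only recommends adjusting one of them.

```bash
#!/usr/bin/env bash

# Print $1 as a JSON string literal, escaping backslash, double quote, newline and tab.
json_str() {
  local s=$1 out="" c i
  for ((i = 0; i < ${#s}; i++)); do
    c=${s:i:1}
    case $c in
      '\') out+='\\' ;; '"') out+='\"' ;;
      $'\n') out+='\n' ;; $'\t') out+='\t' ;;
      *) out+=$c ;;
    esac
  done
  printf '"%s"' "$out"
}

# Succeed if $1 is a plain decimal number in [0, 1], such as 0, 0.2 or 1.
in_unit_range() {
  awk -v v="$1" 'BEGIN { exit !(v ~ /^[0-9]+(\.[0-9]+)?$/ && v + 0 >= 0 && v + 0 <= 1) }'
}

# Build a chat completion body with optional OpenAI sampling parameters.
# Arguments: MODEL USER_MESSAGE MAX_TOKENS STREAM TEMPERATURE TOP_P
# Pass "" for any parameter that should be left out of the request.
build_body() {
  local model=$1 msg=$2 max_tokens=$3 stream=$4 temperature=$5 top_p=$6 extra=""
  if [ -n "$temperature" ] && [ -n "$top_p" ]; then
    echo "warning: adjusting both temperature and top_p is not recommended" >&2
  fi
  if [ -n "$max_tokens" ]; then
    [[ $max_tokens =~ ^[1-9][0-9]*$ ]] || { echo "max_tokens must be a positive integer" >&2; return 1; }
    extra+=", \"max_tokens\": $max_tokens"
  fi
  if [ -n "$stream" ]; then
    [[ $stream == true || $stream == false ]] || { echo "stream must be true or false" >&2; return 1; }
    extra+=", \"stream\": $stream"
  fi
  if [ -n "$temperature" ]; then
    in_unit_range "$temperature" || { echo "temperature must be in [0, 1]" >&2; return 1; }
    extra+=", \"temperature\": $temperature"
  fi
  if [ -n "$top_p" ]; then
    in_unit_range "$top_p" || { echo "top_p must be in [0, 1]" >&2; return 1; }
    extra+=", \"top_p\": $top_p"
  fi
  printf '{"model": %s, "messages": [{"role": "user", "content": %s}]%s}\n' \
    "$(json_str "$model")" "$(json_str "$msg")" "$extra"
}
```

The resulting body can be passed to curl with -d exactly like the hand-written JSON in the examples on this page.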

⚠️ Note: The tools parameter is not supported.
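When stream is true and the agent's output node supports streaming, an OpenAI-compatible endpoint would normally send server-sent events: data: lines carrying JSON chunks, terminated by data: [DONE]. This page does not describe Gendial's stream format, so the sketch below assumes it follows OpenAI's. It prints the JSON payload of each chunk on its own line; sse_payloads is a hypothetical name, and extracting the text from each chunk is left to a JSON tool.

```bash
#!/usr/bin/env bash

# Read an OpenAI-style server-sent event stream on stdin and print the JSON
# payload of each "data:" line, stopping at the "[DONE]" sentinel.
# Assumption: Gendial's stream uses the OpenAI SSE format.
sse_payloads() {
  local line payload
  while IFS= read -r line || [ -n "$line" ]; do
    line=${line%$'\r'}            # tolerate CRLF line endings
    case $line in
      data:*)
        payload=${line#data:}
        payload=${payload# }        # drop the optional space after the colon
        [ "$payload" = "[DONE]" ] && return 0
        printf '%s\n' "$payload"
        ;;
    esac                           # blank lines and other SSE fields are ignored
  done
}

# Hypothetical usage; curl -N disables buffering so chunks print as they arrive:
# curl -sS -N https://gendial.cn/api/v1/chat/completions \
#   -H "Content-Type: application/json" -H "Authorization: Bearer API_KEY" \
#   -d '{"model": "AGENT_GUID", "stream": true, "messages": [{"role": "user", "content": "Hello!"}]}' \
#   | sse_payloads
```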

Standard OpenAI Request Example

The following curl command sends a standard request:

```bash
curl https://gendial.cn/api/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer API_KEY" \
  -d '{
    "model": "AGENT_GUID",
    "messages": [
      {
        "role": "system",
        "content": "You are a helpful assistant."
      },
      {
        "role": "user",
        "content": "Hello!"
      }
    ]
  }'
```

Replace API_KEY and AGENT_GUID with your intelligent agent's API key and GUID.

Gendial Platform Extended Parameters

In addition to the standard OpenAI parameters, API calls also support the following parameters, which expose additional capabilities of the platform's intelligent agents.

memoryId Parameter

The memoryId tracks the conversation history and the current state of the intelligent agent. When calling the API, you can pass the memoryId parameter to identify the conversation. Every response from the intelligent agent returns a memoryId, which can be reused in subsequent requests.

There are two ways to call the API with respect to memoryId:

Not using memoryId: This is the standard OpenAI calling mode. The client manages the conversation history and sends it in the messages field. Each call is treated as a new conversation that starts from the beginning node, and a new memoryId is returned every time. This mode suits simple question-and-answer agents whose workflows contain no loops.

Using memoryId: The client does not send a memoryId on the first turn, and records the memoryId returned by the intelligent agent. On later turns the client sends that memoryId, so the agent knows the conversation history and its current node. To start a new conversation, simply omit the memoryId.

When using memoryId, the client does not need to manage the conversation history: the messages field only needs to contain the new user message, and the intelligent agent retrieves the stored history itself. The number of history records the agent uses is configurable in the agent settings.

For agents with complex workflows that contain loops, using memoryId correctly is essential. Otherwise every request starts from scratch, and the intelligent agent will not work as designed.
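A minimal bash sketch of the memoryId flow: send only the new user message, read the memoryId from the response, and send it back on the next turn. This page does not say where memoryId appears in the response, so the sketch assumes a top-level string field named memoryId in the JSON body; the sed-based extraction is a simplification, and a real client should use a JSON parser. Function names and the GENDIAL_API_KEY and GENDIAL_AGENT_GUID environment variables are hypothetical.

```bash
#!/usr/bin/env bash

# Print $1 as a JSON string literal, escaping backslash, double quote, newline and tab.
json_str() {
  local s=$1 out="" c i
  for ((i = 0; i < ${#s}; i++)); do
    c=${s:i:1}
    case $c in
      '\') out+='\\' ;; '"') out+='\"' ;;
      $'\n') out+='\n' ;; $'\t') out+='\t' ;;
      *) out+=$c ;;
    esac
  done
  printf '"%s"' "$out"
}

# Build a body carrying only the new user message.
# Arguments: MODEL USER_MESSAGE [MEMORY_ID]; an empty MEMORY_ID is omitted,
# which starts a new conversation.
memory_body() {
  local model=$1 msg=$2 memory_id=${3:-} mem=""
  [ -n "$memory_id" ] && mem=", \"memoryId\": $(json_str "$memory_id")"
  printf '{"model": %s%s, "messages": [{"role": "user", "content": %s}]}\n' \
    "$(json_str "$model")" "$mem" "$(json_str "$msg")"
}

# Print the memoryId found in a response body read from stdin.
# Assumption: the response contains a "memoryId": "<string>" field.
extract_memory_id() {
  sed -n 's/.*"memoryId"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Hypothetical interactive loop that reuses the memoryId returned by each turn.
chat_loop() {
  local memory_id="" msg response new_id
  while IFS= read -r -p "> " msg; do
    response=$(curl -sS https://gendial.cn/api/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer ${GENDIAL_API_KEY}" \
      -d "$(memory_body "$GENDIAL_AGENT_GUID" "$msg" "$memory_id")") || return 1
    printf '%s\n' "$response"
    new_id=$(printf '%s' "$response" | extract_memory_id)
    # Keep the previous memoryId if this response did not include one.
    [ -n "$new_id" ] && memory_id=$new_id
  done
  return 0
}
```

Restarting chat_loop begins with an empty memoryId, which corresponds to starting a new conversation as described above.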

imageUrl Parameter

If the intelligent agent or its underlying large model supports image input, you can pass an image through the API. This corresponds to the image_url field in the OpenAI API specification: the image can be passed either as the imageUrl parameter or as an image_url item inside messages.
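The bash sketch below builds a request body that passes an image inside messages, using the OpenAI content-part format: a text part plus an image_url part whose url field holds the image address. The function name is hypothetical, and whether the request succeeds depends on the agent's image support.

```bash
#!/usr/bin/env bash

# Print $1 as a JSON string literal, escaping backslash, double quote, newline and tab.
json_str() {
  local s=$1 out="" c i
  for ((i = 0; i < ${#s}; i++)); do
    c=${s:i:1}
    case $c in
      '\') out+='\\' ;; '"') out+='\"' ;;
      $'\n') out+='\n' ;; $'\t') out+='\t' ;;
      *) out+=$c ;;
    esac
  done
  printf '"%s"' "$out"
}

# Build a body whose user message combines text and an image URL,
# using the OpenAI multimodal content-part format.
# Arguments: MODEL TEXT IMAGE_URL
image_body() {
  local model=$1 text=$2 image_url=$3
  printf '{"model": %s, "messages": [{"role": "user", "content": [{"type": "text", "text": %s}, {"type": "image_url", "image_url": {"url": %s}}]}]}\n' \
    "$(json_str "$model")" "$(json_str "$text")" "$(json_str "$image_url")"
}
```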

fileUrl Parameter

If the intelligent agent supports file input, you can pass the fileUrl parameter. The URL must be reachable by the platform. The agent can then reference the file through the system variable sys.fileUrl, for example to download and read it.

originalFileName Parameter

When the intelligent agent supports file input, you can pass the originalFileName parameter together with fileUrl. The agent can then reference the original file name through the system variable sys.fileName.
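A minimal bash sketch that attaches a file by URL and adds originalFileName only when a name is supplied. The function name is hypothetical; the field names fileUrl and originalFileName are the ones described above.

```bash
#!/usr/bin/env bash

# Print $1 as a JSON string literal, escaping backslash, double quote, newline and tab.
json_str() {
  local s=$1 out="" c i
  for ((i = 0; i < ${#s}; i++)); do
    c=${s:i:1}
    case $c in
      '\') out+='\\' ;; '"') out+='\"' ;;
      $'\n') out+='\n' ;; $'\t') out+='\t' ;;
      *) out+=$c ;;
    esac
  done
  printf '"%s"' "$out"
}

# Build a body that passes a file by URL. The platform must be able to fetch
# the URL; inside the agent it is available as sys.fileUrl, and the optional
# name as sys.fileName.
# Arguments: MODEL USER_MESSAGE FILE_URL [ORIGINAL_FILE_NAME]
file_body() {
  local model=$1 msg=$2 file_url=$3 name=${4:-} name_field=""
  [ -n "$name" ] && name_field=", \"originalFileName\": $(json_str "$name")"
  printf '{"model": %s, "messages": [{"role": "user", "content": %s}], "fileUrl": %s%s}\n' \
    "$(json_str "$model")" "$(json_str "$msg")" "$(json_str "$file_url")" "$name_field"
}
```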

variables Parameter

Beyond the standard OpenAI API, the Gendial intelligent agent API also supports the variables parameter, which sets runtime variables that control the intelligent agent's workflow and behavior.

The format for variables is a dictionary of string keys to string values:

```
{"key1": "value1", "key2": "value2", ..., "keyN": "valueN"}
```

Gendial Extended API Call Example

```bash
curl https://gendial.cn/api/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer API_KEY" \
  -d '{
    "model": "AGENT_GUID",
    "memoryId": "YOUR_MEMORY_ID",
    "messages": [
      {
        "role": "user",
        "content": "Hello!"
      }
    ],
    "fileUrl": "YOUR_FILE_URL",
    "originalFileName": "FILE_NAME",
    "variables": {
      "var1": "value1",
      "var2": "value2"
    }
  }'
```
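Because variables is a string-to-string dictionary, it can be assembled from KEY=VALUE pairs. The bash sketch below is a hypothetical helper that does this and embeds the result, along with an optional memoryId, in a request body; every value is sent as a JSON string, matching the format described above.

```bash
#!/usr/bin/env bash

# Print $1 as a JSON string literal, escaping backslash, double quote, newline and tab.
json_str() {
  local s=$1 out="" c i
  for ((i = 0; i < ${#s}; i++)); do
    c=${s:i:1}
    case $c in
      '\') out+='\\' ;; '"') out+='\"' ;;
      $'\n') out+='\n' ;; $'\t') out+='\t' ;;
      *) out+=$c ;;
    esac
  done
  printf '"%s"' "$out"
}

# Turn KEY=VALUE arguments into a JSON object whose values are all strings,
# e.g. var1=value1 var2=value2 -> {"var1": "value1", "var2": "value2"}.
# Only the first "=" separates key from value.
variables_json() {
  local pair key out="" sep=""
  for pair in "$@"; do
    case $pair in
      *=*) ;;
      *) echo "expected KEY=VALUE, got: $pair" >&2; return 1 ;;
    esac
    key=${pair%%=*}
    out+="${sep}$(json_str "$key"): $(json_str "${pair#*=}")"
    sep=", "
  done
  printf '{%s}\n' "$out"
}

# Build a body with model, an optional memoryId, one user message and variables.
# Arguments: MODEL MEMORY_ID USER_MESSAGE [KEY=VALUE ...]
# Pass "" as MEMORY_ID to start a new conversation.
variables_body() {
  local model=$1 memory_id=$2 msg=$3 vars mem=""
  shift 3
  vars=$(variables_json "$@") || return 1
  [ -n "$memory_id" ] && mem=", \"memoryId\": $(json_str "$memory_id")"
  printf '{"model": %s%s, "messages": [{"role": "user", "content": %s}], "variables": %s}\n' \
    "$(json_str "$model")" "$mem" "$(json_str "$msg")" "$vars"
}
```

For example, variables_body AGENT_GUID "" Hello var1=value1 var2=value2 produces a body equivalent to the example above without memoryId, fileUrl and originalFileName.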